Titans, a new model architecture

Even amid the steady stream of new ML research, Google Research recently released something that stands out: a new model architecture called Titans.

It will take some time to see how much impact the Titans architecture will have, but it’s a fascinating concept.

The lay of the land

Before jumping into what Titans are, let’s set some context.

Titans are a new approach to an old problem – processing sequential data to do something with it, like generating new sequences or outputting a prediction. Today, the most common use of sequence modeling is generating new text from natural language input, which is the basic use case behind generative AI.

Existing architectures

So far, sequence models have been based on either recurrent neural networks (RNNs) or Transformers.

RNNs

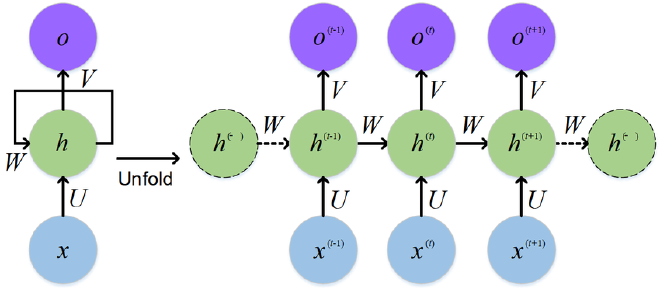

An RNN processes inputs sequentially, and as it does so, keeps track of a hidden state to “remember” relevant past information. At each step, the model uses the input and the hidden state to generate the output, along with an updated hidden state.

Standard RNN 1
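The recurrence described above can be sketched in a few lines of plain Python. This is a minimal illustration, assuming a single tanh layer and treating the hidden state itself as the output; the names `rnn_step`, `run_rnn`, `W_xh`, `W_hh`, and `b` are made up for this example.

```python
import math

def rnn_step(x, h, W_xh, W_hh, b):
    """Compute the next hidden state: tanh(W_xh @ x + W_hh @ h + b)."""
    new_h = []
    for wx_row, wh_row, bias in zip(W_xh, W_hh, b):
        from_input = sum(w * xi for w, xi in zip(wx_row, x))
        from_state = sum(w * hi for w, hi in zip(wh_row, h))
        new_h.append(math.tanh(from_input + from_state + bias))
    return new_h

def run_rnn(inputs, h0, W_xh, W_hh, b):
    """Process inputs strictly in order, carrying the hidden state forward."""
    h = h0
    states = []
    for x in inputs:
        h = rnn_step(x, h, W_xh, W_hh, b)
        states.append(h)  # here the hidden state doubles as the step's output
    return states

# One-dimensional toy example: the final state depends on input order.
W_xh, W_hh, b = [[1.0]], [[0.5]], [0.0]
print(run_rnn([[1.0], [0.0]], [0.0], W_xh, W_hh, b)[-1])
print(run_rnn([[0.0], [1.0]], [0.0], W_xh, W_hh, b)[-1])
```

Because each step needs the previous hidden state, the loop cannot be parallelized across the sequence, which is the main contrast with attention.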

Transformers

The Transformer architecture relies on the attention mechanism to weigh parts of the input sequence differently as it processes each input element. This attention mechanism helps the model capture relevant parts of the input sequence without needing a hidden state. Because attention does not have to process elements one at a time, it is highly parallelizable, and it is also good at capturing long-range dependencies.
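As a rough illustration of that weighting, here is a sketch of scaled dot-product attention for a single query, written in plain Python. It leaves out the learned projections, multiple heads, and masking that a real Transformer uses; the function names are chosen for this example only.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Weigh each value by how well its key matches the query."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return output, weights

# The query matches the first key better, so the first value gets more weight.
output, weights = attend([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], [[10.0], [20.0]])
print(weights, output)
```

Every query can be scored against every key independently, which is why this computation parallelizes well.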

We are currently in the era of the Transformer architecture. All the major LLMs are Transformer-based, and there have been a lot of advances in this space.

Original Transformer 2

What is the Titans architecture?

Components

The Titans architecture combines three modules: core, long-term memory, and persistent memory.

Core module

The main data processing happens in this module, but it only captures short-term dependencies. It relies on the attention mechanism, similar to the Transformer.

Long-term memory module

This module stores and retrieves historical information.

Persistent memory module

This module encodes learnable, data-independent parameters that capture task-specific knowledge.

Surprise

One of the key features in this architecture is the surprise metric. Surprise is a calculation that quantifies how different the current input data is from previously seen data.

The model achieves long-term memory by compressing historical information into just the most important events. The surprise metric informs which events are important enough to retain.

Intuitively, this is similar to how human memory works. The events we remember from long ago tend to be those that were different from our day-to-day expectations.

The Titans implementation enhances the surprise metric by dividing it into two parts:

- Past surprise: how surprising the recent past was

- Momentary surprise: how surprising the current input is
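To make the two kinds of surprise concrete, here is a simplified sketch. As I understand the Titans paper, momentary surprise is measured by the gradient of a memory loss with respect to the memory's parameters, and past surprise acts like a momentum term over those gradients. The sketch assumes a linear memory (a plain matrix `M`) and fixed constants `eta`, `theta`, and `alpha`; the paper uses a deeper memory and data-dependent values, so treat every name and number here as an assumption.

```python
def matvec(M, k):
    """Multiply matrix M (list of rows) by vector k."""
    return [sum(m * kj for m, kj in zip(row, k)) for row in M]

def memory_step(M, S, key, value, eta=0.9, theta=0.1, alpha=0.01):
    """One memory update driven by past and momentary surprise.

    M: memory matrix; S: past surprise (same shape as M).
    eta decays past surprise, theta scales momentary surprise,
    alpha is a forgetting rate applied to the old memory.
    """
    error = [p - v for p, v in zip(matvec(M, key), value)]
    # Momentary surprise: gradient of ||M @ key - value||^2 w.r.t. M.
    grad = [[2.0 * e * kj for kj in key] for e in error]
    new_S = [[eta * s - theta * g for s, g in zip(s_row, g_row)]
             for s_row, g_row in zip(S, grad)]
    new_M = [[(1.0 - alpha) * m + s for m, s in zip(m_row, s_row)]
             for m_row, s_row in zip(M, new_S)]
    loss = sum(e * e for e in error)  # how badly the memory predicted value
    return new_M, new_S, loss

# Showing the same (key, value) pair twice: it is less surprising the second time.
M = [[0.0, 0.0], [0.0, 0.0]]
S = [[0.0, 0.0], [0.0, 0.0]]
M, S, first = memory_step(M, S, [1.0, 0.0], [1.0, 2.0])
M, S, second = memory_step(M, S, [1.0, 0.0], [1.0, 2.0])
print(first, second)
```

An input the memory already predicts well produces a small gradient and barely changes the memory, while an unexpected input produces a large update.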

Design variants

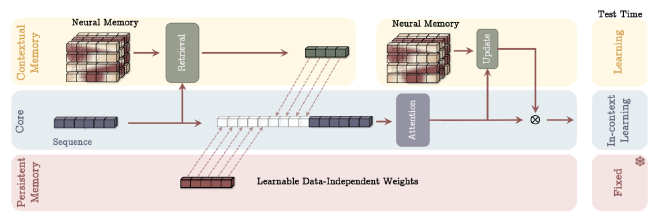

Memory as a context (MAC)

The long-term memory module is used to power the current context. It divides the input sequence into segments and pulls relevant information for each segment from long-term memory. The input segment, retrieved historical data, and persistent memory parameters are all then fed into the attention module.

The output of the attention module is also used to update the long-term memory, providing in-context learning.

MAC architecture 3
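The MAC data flow can be sketched as a loop over segments. This only shows the order of operations described above; `retrieve`, `attend`, and `update` are hypothetical placeholders passed in by the caller, not the paper's actual modules, and tokens are plain strings to keep the flow visible.

```python
def chunk(sequence, size):
    """Split a sequence into consecutive segments of at most `size` items."""
    return [sequence[i:i + size] for i in range(0, len(sequence), size)]

def mac_forward(tokens, persistent, segment_size, retrieve, attend, update):
    """Run the memory-as-context flow and return the attention output per segment."""
    memory = []  # toy long-term memory state
    outputs = []
    for segment in chunk(tokens, segment_size):
        history = retrieve(memory, segment)        # pull relevant history
        context = persistent + history + segment   # what attention sees
        out = attend(context)
        memory = update(memory, out)               # attention output updates memory
        outputs.append(out)
    return outputs

# Toy stand-ins: retrieve everything, identity attention, remember the last 2 tokens.
outputs = mac_forward(
    list("abcdef"), ["P"], 2,
    retrieve=lambda memory, segment: list(memory),
    attend=lambda context: context,
    update=lambda memory, out: out[-2:],
)
for out in outputs:
    print(out)
```

With these toy functions, each printed context contains the persistent token, whatever the memory kept from the previous segment, and the current segment.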

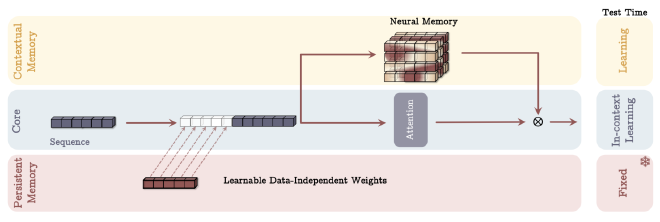

Memory as a gate (MAG)

There are two branches in this variant: one branch for the input data to update the long-term memory, and one branch that applies attention over the input data using a sliding window (not as segments).

The output is combined from both branches, blending precise short-term memory with fading long-term memory.

MAG architecture 3
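One simple way to picture combining the two branches is an element-wise gate. The sketch below assumes a sigmoid gate that blends the attention branch with the memory branch; the exact gating used in the paper may differ, and `gate_combine` and `gate_logits` are illustrative names.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def gate_combine(attention_out, memory_out, gate_logits):
    """Blend two branch outputs element-wise.

    A gate near 1 favors the attention branch (short-term),
    a gate near 0 favors the memory branch (long-term).
    """
    combined = []
    for a, m, logit in zip(attention_out, memory_out, gate_logits):
        g = sigmoid(logit)
        combined.append(g * a + (1.0 - g) * m)
    return combined

# A logit of 0 gives a gate of 0.5, so both branches contribute equally.
print(gate_combine([1.0, 3.0], [3.0, 1.0], [0.0, 0.0]))
```

In a trained model the gate values would be learned, so the model can decide per feature how much to rely on each branch.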

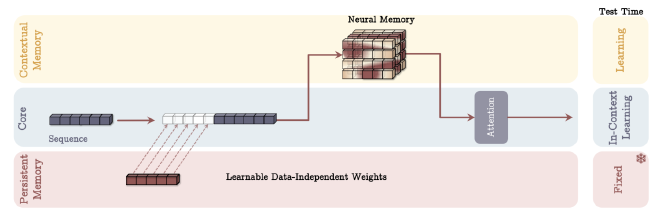

Memory as a layer (MAL)

This variant takes the input data, processes it with the persistent memory parameters, retrieves the long-term memory, and then on that output applies sliding-window attention.

Essentially, this becomes a memory layer that compresses historical and current context.

MAL architecture 3
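The sliding-window attention used by MAG and MAL limits which positions each token can attend to. Here is a small sketch of such a mask, assuming a causal window in which each position sees itself and the `window - 1` positions before it; the function name is made up for this example.

```python
def sliding_window_mask(seq_len, window):
    """mask[i][j] is True when position i may attend to position j."""
    return [
        [(i - window < j <= i) for j in range(seq_len)]
        for i in range(seq_len)
    ]

# With a window of 2, each row allows at most two positions.
for row in sliding_window_mask(4, 2):
    print(["x" if allowed else "." for allowed in row])
```

Anything older than the window is invisible to attention, which is why these variants lean on the long-term memory module for earlier context.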

Why are Titans important?

What’s wrong with Transformers?

- Scaling 3: The cost of attention grows quadratically with context length, so long contexts become expensive, both computationally and financially, without an equivalent benefit. There have been attempts to make Transformers more scalable, such as Linear Transformers 4, but none has been a clear success.

- Effective context 5: Even when a Transformer is given a long context, its performance suffers. As the sequence length increases, the model becomes less able to retrieve the relevant information.
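A quick back-of-the-envelope calculation shows what quadratic scaling means. The sketch counts the query-key scores that full self-attention computes for one head in one layer; the context lengths are arbitrary examples.

```python
def attention_pairs(context_length):
    """Number of query-key scores full self-attention computes (one head, one layer)."""
    return context_length * context_length

for n in (1_000, 2_000, 4_000):
    print(n, attention_pairs(n))
```

Doubling the context length quadruples the number of scores, so a context 4 times longer needs 16 times as many.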

Strengths of Titans

- Perform better with long contexts: As shown in the research (namely the needle-in-a-haystack results), the Titans architecture retrieves relevant information and scales well with long contexts.

- Resemble human memory better: The surprise-based long-term memory is similar to how humans remember past events. This could make working with these kinds of models more intuitive for humans.

- Are flexible: As the research illustrates, there are three variants of the architecture, each with its own tradeoffs. It seems possible to rearrange the key modules of the architecture for different applications.

Closing thoughts

There are still a lot of open questions and unknowns about Titans, but the research is promising. It’ll be interesting to see how the architecture performs in the real world and what challenges it will face.

- Feng, Weijiang & Guan, Naiyang & Li, Yuan & Zhang, Xiang & Luo, Zhigang. (2017). Audio visual speech recognition with multimodal recurrent neural networks. 681-688. 10.1109/IJCNN.2017.7965918.

- Transformers are RNNs: Fast Autoregressive Transformers with Linear Attention

- Cheng-Ping Hsieh, et al, “RULER: What’s the Real Context Size of Your Long-Context Language Models?,” in First Conference on Language Modeling, 2024.